AI vs. Creative Industry: Will Robots Steal All the Fun Jobs?

AI as both concept and tool has grown from a highly technical, niche background into the hands of the public, quickly becoming a very hot topic. The increasing number of AI models available to creative industries raises questions about utility, plagiarism, and what role AI could play in shaping our future. For today’s discussion, we’re focusing on three categories of its application: text-to-image generators like Midjourney and DALL-E, language processors like ChatGPT and all its incarnations, and workflow automation software like RunwayML.

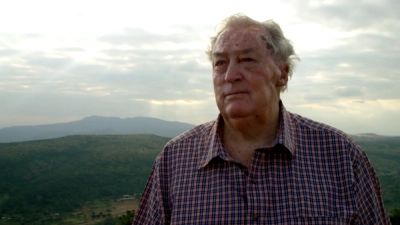

David Conklin is a motion designer and creative technologist at Trollbäck+Company. As a creative coder, he explores how emerging platforms and technologies help us understand, visualize, and interact with the world in new ways. He sits down with Lili Emtiaz, an art director with 10 years of experience in branding, motion design, logo design, and creative illustration in media, culture, and entertainment.

Lili: So David, as a designer, how do you see these text-to-image generators being used in our day-to-day workflow?

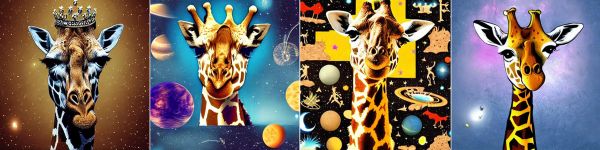

David: You know, I see them as kind of supplementing the design process as it already exists. So much of what we do as designers is gathering references and looking at work that’s out there in the world, or trying to quickly visualize an idea that we can't quite make ourselves. When you look at things like Midjourney, there’s huge potential for reference-gathering and sketching out loose concepts and ideas. Just as you wouldn't use a tear page in your final product, you wouldn't take one of these images produced by AI and deliver it as is. But I think along the way, as inspiration or reference, it's going to be a game changer, because suddenly now you can just say “I want a giraffe wearing a crown, you know, in outer space” and you don't have to find some obscure reference, you just get it right. So that's going to be helpful.

L: That's an interesting take. Everyone has been so quick to jump from creation to delivery, there hasn’t been much discussion about how it truly does mirror an internal team’s current process.

D: That's the way that I see it. I mean, it's so hard to predict how it will go. But I think yeah, it will supplement the process. Because ultimately it is other people's work. You can't use other people's work and say that it's your own whether you copy/paste from a Google search, or you have an AI system that's trained on this model generating it for you. That being said, we use images we don't have rights to all the time in the design phase, when we share our references with clients. I would just hope that anything in the final product would not be directly created by an AI model.

L: It's almost like a smarter Pinterest.

D: Yeah, exactly.

L: There are AI models that you can train with your own work, which circumvents copyright issues. Is that something you could potentially see being used in our workflow for something like rounding out a campaign?

D: I think, yes. There are specific generative algorithms that are meant to take an incomplete set and create the missing elements. So if I have a campaign ad, and I need to make that ad in 16:9, but then ripple it out to a bunch of different formats, a bunch of different durations, and a bunch of frame rates, I can definitely see having AIs that are capable of doing that. Or, you know, it could be interesting, a world where you train AI on all of Trollbäck’s work, and then you can kind of just use that to spitball.

L: It's almost like a branded AI in that sense, because it's still coming from us. If another studio were to try it, they'd be getting different results according to their style.

D: And different results based on which underlying model they used, and what training data they fed it. And then there's the question: it's our work, but is it our code? So then who actually owns it?

L: So that's an interesting segue. To some degree, visual creative ownership is more black and white than something like code. Non-programmers are using language processors, such as ChatGPT, to just write code for them. People seem to be more okay with that versus a narrative story. Do you think that is like an inherent bias in people?

D: I spend a lot of time thinking about the question of what constitutes creative work. Where’s that invisible line that separates something technical from something conceptual? I think we all can subconsciously differentiate between the two, but it’s really hard to put into words exactly how they contrast. I think in your example, a few lines of code used as an expression in After Effects is such a tiny piece of a much larger project that it’s not “helpful” without your own creative input (you need a layer to put it on), whereas asking ChatGPT to write a story from scratch is “helpful” on its own (you could just send it to a client). I think we can think about it as the same as copy-pasting a snippet from Stack Overflow versus downloading an animation, putting a vignette on it, and calling it your own.

I think tasks that fall into the first category (like rigging, rotoscoping, tracking, denoising, set extension) will welcome automation far more openly than those that fall into the second.

L: And this seems true for both visual and written content generated by AI, yeah?

D: I think there's less of a distinction between text-to-image (Midjourney) and text-to-text (ChatGPT) models in terms of their underlying technology.

In the same way you could use Midjourney to create a texture that you then apply to your own 3D model, you can use ChatGPT to write an expression [for After Effects]. Similarly, you could also ask Midjourney to create a whole 3D scene for you or ChatGPT to write you an entire novel.

It's not that images are inherently more creative than text, but that we as humans decide where our own ethical line is between utility and plagiarism. We instinctively know when we're "cheating" at creative work, versus leveraging a tool to help us on our journey.

L: Yeah, and nobody calls rotoscoping an art form, but rather one of their many skill sets. However, it is certainly a large part of certain people’s careers. Could you talk more about what these workflow automation tools will do to our workflows?

D: I mean, we're already using them now on pretty much every project in some form or another. The big ones that I can think of: Topaz Labs has a great upscaler called Gigapixel, which you can basically feed a 2K image and have it spit out something much larger. We fed it a 10K image and had it spit out a 30K image, things like that. In the past, you would have had to hire an artist to come and uprez that and paint out all the artifacts, or spend thousands of dollars tile-rendering it on some farm. Now you just have an AI that upscales it for you. And ditto with the roto thing: even if you can only get to, you know, 80% of the roto, you can get it done in one click. That's a huge help. And so I definitely see those tasks that fall below that creativity threshold – that are considered more rote and repetitive – leveraging AI systems more heavily. I think artists will spend less time doing that stuff, and I think that’s interesting: what are the long-term implications of that? We were talking the other day about how much the early days of the Internet, being on MySpace and having to kind of mess with the code and play with the guts of the computers, informed the way that we exist online and create art now, right? I wonder what happens when you have a whole generation of artists that has never learned the process of rotoscoping and has never learned the process of these more rote, repetitive tasks. And like, maybe it'll be cool, because they're just more focused on creativity. Or maybe they'll be, you know, lacking those kinds of fundamental rite-of-passage processes.

L: Even looking at Photoshop today—I remember being in school and taking the Pen Tool, and slowly cutting out something from the background. My teacher at the time worked for magazines and told us that cutting people out was about 80% of his career. Now you go into Photoshop, hit “Select Subject,” and the background suddenly just disappears.

D: Yeah. I think there's a romanticized view of creativity, where all you do as an artist is sit there with your arms crossed and tell the computer what to do. Simply thinking in your head, “make this!” – I would love to get to that future. I think we've got a long period between now and then when it's going to be kind of a gray area, figuring out how much of this is the artist’s? How much of this is the AI? How much of this is the developer? How much of this is the training set? Right? And how do we account for who should get paid? Who should claim ownership and all the rest of that? So I think that's kind of where we are. And I'm excited and terrified to see what happens.

L: You've kind of already given this answer, but just to ask more directly: what do you think AI will do in terms of expectations from clients and internal teams?

D: It's a good question. I think the fear of a world where you can just sit there and art-direct a computer is that all of a sudden your clients will want everything under the moon, right? Because out will go budgets and timelines, you can just generate infinite images and things like that. And I mean, I think that that is ultimately true. But no matter what any of these systems output, the benchmark is always going to be a human as to whether the work is acceptable or not. There's always going to be a bottleneck of humans approving things at some level. And so I don't think that AI will necessarily transcend that limit, I think that humans will ultimately always need to say, “This is good,” or “this is bad,” or “this needs to be changed.” But I do think, for instance, now, we do motion tests for almost everything right at the beginning. That wasn’t always the case; for a while, the computers weren't really powerful enough to do animation if you weren’t paying for it. And so I think we might end up in a world where we are delivering higher fidelity things more quickly, but not necessarily in a much, much larger volume.

L: Well, I'm excited for the future that you are painting.

D: I mean, I hope it goes that way. And not the Elon Musk way of world domination.

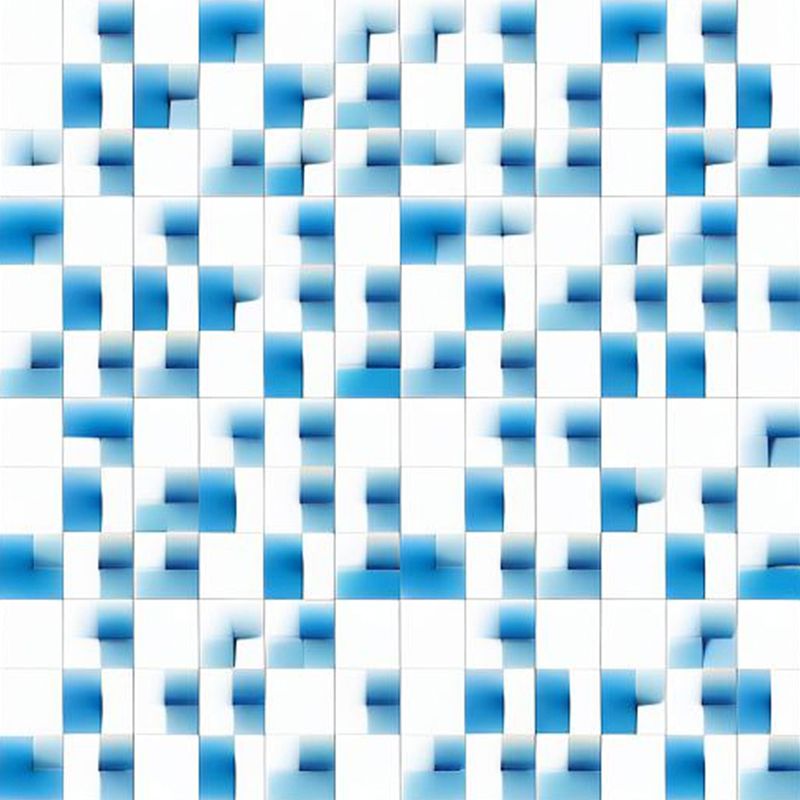

Today’s interview was transcribed by Otter.ai. The article was named by ChatGPT. The same algorithm from RunwayML created both the giraffe and Trollbäck images, each using different training models – the giraffe images were created with the fantasy preset provided, whereas the Trollbäck images were created with images supplied to RunwayML from the Trollbäck archives.
